Topher Collins

Data Analyst and Data Scientist

Proficient in Python, R, SQL, TensorFlow and Scikit-learn

Dungeons & Dragons nerd, founder of DnDwithToph.com

View My LinkedIn

View My Articles on Medium

Fine-tuning LLM for RPG Statblocks > Go to project

Aim

This project aims to fine-tune the Llama 3 8B LLM into a task-specific model that creates Dungeons & Dragons monster statblocks in a structured style and format.

Data

The original monster data can be found at D&D Monster Spreadsheet

The processed data for fine-tuning can be found at D&D Monster Hugging Face Dataset

Process

- We simplified the dataset, removing features that aren’t required, such as ‘Author’, ‘Url’, and ‘Font’, and some others because they wouldn’t teach us anything more about our model.

- Randomly selected 20 samples to produce our baseline model.

- Produced a prompts column for each sample through a combination of manual writing and LLM generation.

- Converted our data into the format for fine-tuning: instruction (prompts), input (empty strings currently, but could be used for websites or specific stats), and output (monster data in the desired structure).

- Pushed the dataset to Hugging Face for integration with Hugging Face datasets API.

- Set up a Google Colab environment with Torch, Unsloth (for efficient fine-tuning), Xformers, and the other required packages.

- Set up Unsloth’s Llama 3 8B as the base model, with LoRA adapters for fine-tuning.

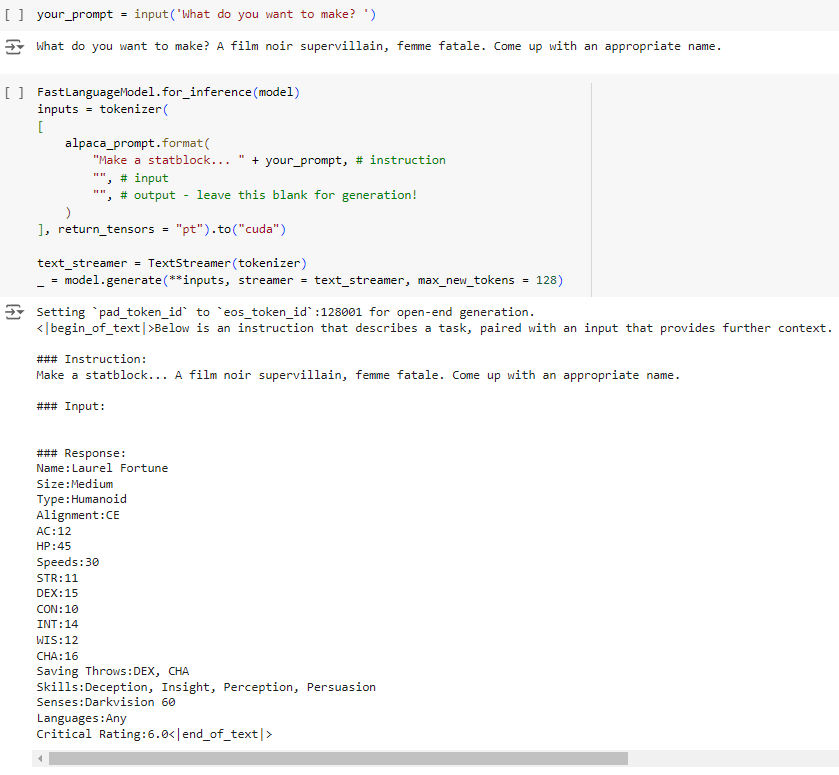

- Trained our model and tested it on simple prompts (with no specific requirements) and targeted prompts (requiring specific info to be included in the statblock).

- Saved our model locally and pushed LoRA adapters to Hugging Face.
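
The data preparation steps above (dropping unneeded columns, sampling 20 monsters, and building instruction/input/output records) can be sketched roughly as follows. This is a minimal illustration using only the standard library, not the project's actual code: the toy rows, the `Name`, `Size`, and `HP` columns, the prompt wording, and the "Key: value" output layout are all assumptions made for the example. Only the ‘Author’, ‘Url’, and ‘Font’ column names and the three record fields come from the description above.

```python
import random

# Columns named in the write-up as not required.
DROP_COLUMNS = {"Author", "Url", "Font"}


def simplify(row):
    """Return a copy of a monster row without the unneeded columns."""
    return {k: v for k, v in row.items() if k not in DROP_COLUMNS}


def format_output(row):
    """Render a row as 'Key: value' lines (an assumed output structure)."""
    return "\n".join(f"{k}: {v}" for k, v in row.items())


def to_record(row, prompt):
    """Build one instruction/input/output record; input is left empty."""
    return {
        "instruction": prompt,
        "input": "",
        "output": format_output(simplify(row)),
    }


def sample_rows(rows, n=20, seed=0):
    """Randomly pick n rows (or all of them if fewer are available)."""
    rng = random.Random(seed)
    return rng.sample(rows, min(n, len(rows)))


# Toy rows standing in for the real spreadsheet (hypothetical columns).
monsters = [
    {"Name": f"Monster {i}", "Size": "Medium", "HP": 10 + i,
     "Author": "x", "Url": "y", "Font": "z"}
    for i in range(50)
]

selected = sample_rows(monsters)
# Hypothetical prompt template; the real prompts were hand-written or LLM-generated.
records = [to_record(m, f"Create a statblock for {m['Name']}.") for m in selected]
```

Each entry in `records` is a plain dictionary, so the list could be written out as JSON or loaded into a dataset library before being pushed to a hub.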

Conclusion

Our model’s outputs consistently followed the desired format. As such, we could easily take the model outputs and process them for further needs, such as database storage or providing them directly to a user. There were some signs of overfitting, which is understandable given our very small fine-tuning dataset. In particular, the ‘creativity’ of the model seems limited: it often produces names that are extremely uniform and basic.
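
As a rough illustration of what that downstream processing might look like, the sketch below parses a statblock into a dictionary and stores it in an in-memory SQLite database. It assumes the output is a series of "Key: value" lines, which is a guess at the structure rather than the model's confirmed format. The sample text, the `monsters` table, and both helper functions are hypothetical and written only for this example.

```python
import json
import sqlite3


def parse_statblock(text):
    """Parse 'Key: value' lines into a dict, skipping lines without a colon."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            fields[key.strip()] = value.strip()
    return fields


def store_statblock(conn, fields):
    """Store the parsed statblock as JSON alongside its name."""
    conn.execute("CREATE TABLE IF NOT EXISTS monsters (name TEXT, data TEXT)")
    conn.execute(
        "INSERT INTO monsters (name, data) VALUES (?, ?)",
        (fields.get("Name", ""), json.dumps(fields)),
    )
    conn.commit()


# Invented sample text, not actual model output.
sample_output = "Name: Cave Stalker\nSize: Medium\nHit Points: 45\nSpeed: 30 ft."

parsed = parse_statblock(sample_output)
conn = sqlite3.connect(":memory:")
store_statblock(conn, parsed)
stored = conn.execute("SELECT name, data FROM monsters").fetchall()
```

Keeping the full statblock as a JSON string avoids committing to a fixed set of columns while the output structure is still being refined.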

The model’s LoRA adapters can be found and used here